Take back control

Originally posted on Medium.

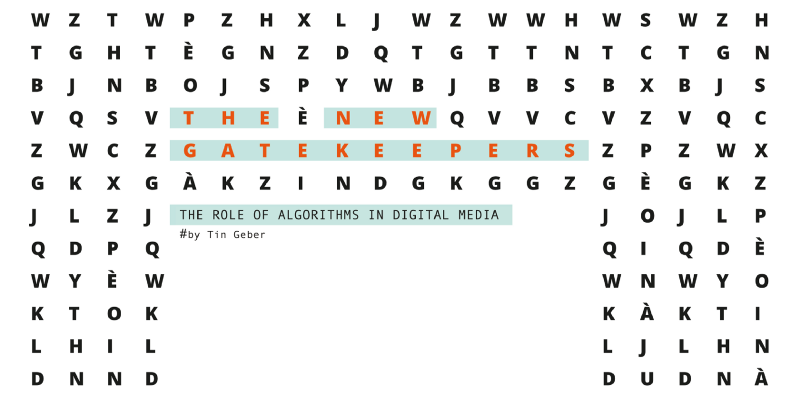

Earlier this year, I published a paper on the role of algorithms in digital media. It explains how letting algorithms (rather than journalists) decide what counts as news changes the information we see.

In a nutshell, most of the information we access has been filtered and sorted by automated systems at some point, whether on our Facebook wall, through Google searches, or in some flavour of “you might also be interested in” or “top picks for you”. There is no way to avoid automated filtering: there is simply too much information in the pipes for humans to manage by themselves. The problem is that we have little or no control over what gets picked for us, or over who decides what gets picked. This relationship is also too new for us humans to have developed even a basic understanding of the rules that govern this human-machine interaction.

Now, this phenomenon used to scare the beagles out of me, but in a rather abstract, “our conceptual paradigms are shifting” way. Then Gamergate happened. And the alt-right, the flat-earthers, the anti-vaxxers, the manosphere. Brexit. Trump.

I know I’m making lots of assumptions here, and admittedly this is a pretty big basket, but in my opinion there is one common thread: whatever the facts on an issue, rage and extreme perspectives are the things that get amplified.

The fundamental phenomenon is nothing new: we tend to amplify the importance of things we believe in, and react knee-jerk against things that challenge our core beliefs. What is different is the scale: we are exposed to dramatically larger amounts of echo-chamber information, in a race to the extremist bottom.

Algorithms play a considerable role in this proliferation of vitriol: let’s take Facebook as an example.

Facebook and facts

Facebook gets bad press because it acts as a huge amplifier of fake, angry, divisive news. When a private corporation holds a monopoly over the online public square, it alone decides what is OK and what isn’t, and the main driver of those decisions is, obviously, profit. And fake news brings in lots of cash.

Simple, on-the-fly fact-checking is not only possible, it’s easy to implement. But it doesn’t bring in clicks. Fake news is shared a lot, and removing it from the platform would cost shareholders lots of moneymaking eyeballs. So Facebook hid behind “neutrality of algorithms” rhetoric to justify a fundamentally immoral pollution of public opinion. And I will bet my Twitter follower count that Facebook had solutions to the fake news problem all along. It was just never in its interest to turn them on.

There is significant research on the ethical implications of algorithmic decision-making (a good starting point is the reading list at the bottom). It usually focuses on the implicit automation of choice, or on the human bias woven into the creation of algorithms. In the end, though, the real danger turned out to be corporate greed that was handed the keys to the cognitive kingdom. In a race to the conceptual bottom, the most popular social media platforms, like Facebook, reduce quality, attention spans and engagement times with the goal of maximising profit. And everyone plays along, because that is the new normal. CBS chairman Les Moonves put it bluntly when he said of Trump’s campaign: “It may not be good for America, but it’s damn good for CBS.”

So where do algorithms come in? Are they just a scapegoat, a convenient straw man for the “not my fault, let’s make money” crowd? Is the loss of signal in the sea of noise an inevitable, ironic result of the internet age? Are we destined to live in a world of fake news, populism and hate?

Tools and weapons

No. Algorithms are an inevitable byproduct of unprecedented info-bombardment. We need tools to make sense of it all. But that is all algorithms are: tools for us to use or misuse. And oh boy, have we been misusing them. From letting white, rich, Western men dominate the production and writing of algorithms, through relinquishing control to corporate mentality, to legally forbidding anyone from peeking under the hood of how algorithmic choices are made, we have come to live in a society where information filters work in a skewed, top-heavy, exclusive way: one where most of the population isn’t represented, where advertisers can filter by race, and where one country’s religious views dictate standards of decency for the entire globe. We have even trained algorithms to be racist.

We have to take back control

It doesn’t have to be this way. We can demand control. But first we have to understand that we have lost it. We have to start recognising that there is a veil of algorithmic mystery inches from our eyes. We have become used to relying on the perceived credibility of algorithmically driven platforms to provide fact-checked, truthful information. Well, at least now it’s clear that nothing of the sort is actually happening.

Engage your inner Sagan

What we can do is realise that it’s happening, and act accordingly: add a lens of critical, constructive skepticism to our world-viewing glasses. For example, the beautifully named Sagan’s standard states that:

Common claims need common evidence; extraordinary claims need extraordinary evidence.

So whenever a piece of news that seems too good (or too absurd) to be true hits your filter bubble, engage your inner Sagan: move that finger away from the “Like” button, put that angry rant on pause, and hold the story at a safe, skeptic’s arm’s length. Then do four seconds of research:

The truth is usually a quick online search away

There are many nonpartisan fact-checking websites out there, like PolitiFact or Snopes. And while you could make a list of trusted sources and religiously check against those, a quick online search through Google, DuckDuckGo or Bing will usually give you a pretty good overview of whether Trump actually signed an Executive Order banning Saturday Night Live.

(Yes, I am aware that I just asked you to question an algorithm by asking another algorithm.)

For extra points, follow a couple of links and compare the actual content of each. This way you’ll also start a low-key inoculation process against obviously fake, inflammatory crap, because it all has a similar clickbaity, sensationalist edge. And that is because:

Most fake news is in it for the money

Guess what: exploiting public outrage is great business. People make lots of money writing utterly absurd fake stories and trolling. These stories then get amplified by their respective filter bubbles, gain notoriety, and feed the always-hungry-for-scandal mainstream media, which turns them into headlines, completing the path from bullshit to breaking news.

Is there anything we can do about the quality of news? Yes. The most important factor in the quality of a news piece is, of course, the journalists themselves: there are amazingly good journalists out there, doing their best to push for quality in a clickbait industry. But we all need to pay the bills, and good journalists increasingly have to spend most of their time on sensationalism, squeezing in slivers of time for hard-hitting journalism. So we can also vote with our wallets:

Support your favourite news platforms and journalists

Yup. Give money. Journalists are increasingly turning to direct funding to free themselves from advertisers’ control. ProPublica publishes consistently high-quality investigative journalism, funded through individual and institutional donations. The Organized Crime and Corruption Reporting Project, aka OCCRP (sometimes pronounced “oh-crap”, as in “oh crap, the OCCRP are coming”), helped break huge corruption stories like the Panama Papers. The Guardian, the Telegraph, the New York Times and the Financial Times all offer subscription models. Vote with your wallet and starve fake news.

Remember that your eyeballs are cash: each time you share a piece of news, you widen its reach and give those platforms a trickle of incentive to keep publishing the articles that get the most clicks. So click, like and share smartly: if you want to share a fake news article for its horror factor, take a screenshot instead of linking to the original.

Let’s grow up together

The Internet is in its teen years. We’re awkwardly testing out our smelly new almost-adult bodies, pushing the boundaries and making our parents furious, and getting into heaps of trouble. We troll, call out, offend and get offended. We hurt and get hurt. We close the doors to our filter bubble and bask in the warmth of sameness. Most of us are still not aware of the very real risks we run, and the long-term damage this might cause.

I think we’re ready to grow out of it. It’ll be so much more rewarding in the long run. We can learn to be kinder by recognising that we are spun into rage on purpose. We can learn to connect and talk by recognising that we are actively kept apart. We can show, through our actions, that we deserve and demand more: more unity, more quality, more control.

Reading list

- My paper :) The New Gatekeepers: The role of algorithms in digital media

- Literally anything by Zeynep Tufekci

- Maya Ganesh’s paper on machine learning and the ethics of driverless cars

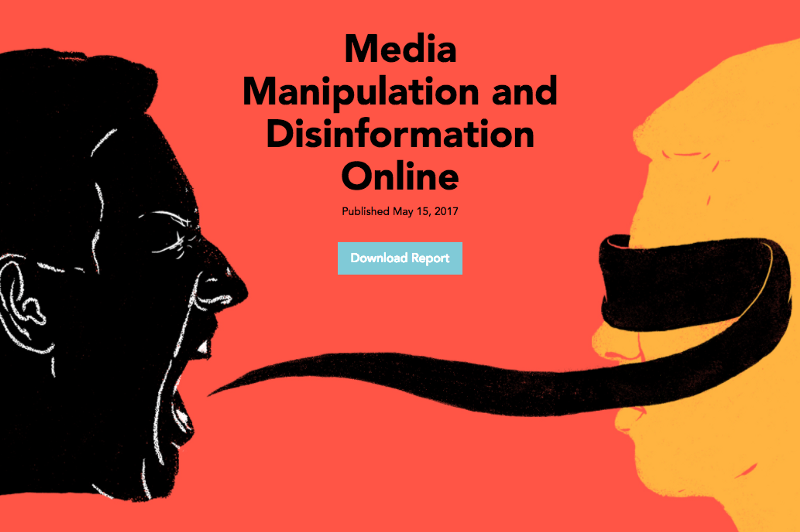

- Data & Society’s report on media manipulation and disinformation online (it is so good)

- The Great British Brexit Robbery, by Carole Cadwalladr for The Guardian

- For a deep dive, check out Tarleton Gillespie unpacking the “algorithm” concept in Keyword: Algorithm

- Literally anything by Kate Crawford

- J. Nathan Matias’s dissertation on governing human and machine behavior in an experimenting society (video) — scroll to 0:43:00 for an interesting example of combating fake news on Reddit

Anything else I should add? Let me know!